Context to Confidence: The Next Phase of Ambiguous Techniques Research

MITRE CTID’s latest ambiguous techniques research turns context into confidence with minimum telemetry requirements and a confidence scoring …

By Mike Cunningham and Antonia Feffer • February 19, 2026

An ambiguous technique is a MITRE ATT&CK® technique whose observable characteristics are insufficient to determine intent. Typical observable data does not allow us to confidently ascertain whether the intent behind the activity is malicious or benign. These techniques sit in the gray space between malicious and benign, but we do not have to treat that gray space as a black box.

In our first Ambiguous Techniques release, we showed how context – peripheral, chain‑level, and technique‑level – helps defenders determine intent when an ATT&CK technique looks benign on the surface. In this phase of research, we move from context to confidence and identify the minimum telemetry requirements needed to detect a technique. Our project participants, Citigroup, CrowdStrike, Fujitsu, Fortinet, HCA Healthcare, Lloyds Banking Group, and Microsoft Corporation, battle-tested and validated our confidence scoring algorithm.

With this latest Ambiguous Techniques release, the MITRE Center for Threat-Informed Defense is confident that adversaries won’t stand a chance against threat-informed defenders.

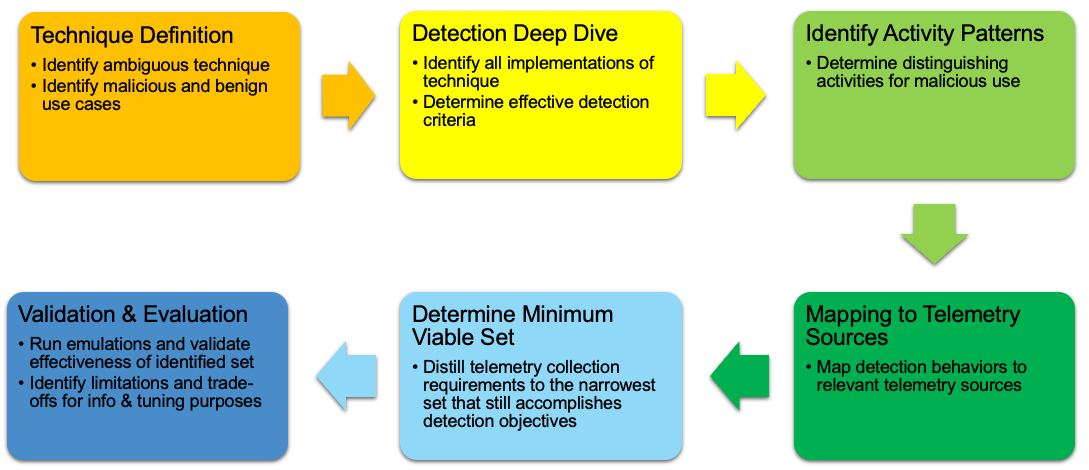

Our first goal in this follow‑on effort was to identify minimum telemetry requirements for detecting ambiguous techniques in a way that actually helps defenders. We did not want a theoretical superset of every log that could apply. We wanted the smallest set of log sources and fields that still support robust, high‑value detections.

To get there, we decomposed our initial set of techniques by applying a three‑pronged research process. We started from the ATT&CK technique page to enumerate relevant data components, then pivoted to our Sensor Mappings to ATT&CK database to discover concrete log sources for each component, and finally cross‑checked those candidates against public analytic repositories such as Sigma and Elastic to see which sources practitioners already rely on.
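To make the three‑pronged process concrete, here is a minimal Python sketch of the join it performs: technique to data components, data components to candidate log sources, then a check against sources that public analytics already use. The mappings are a small, hand-written illustrative subset, not an export of ATT&CK, the Sensor Mappings to ATT&CK project, Sigma, or Elastic, and `build_catalog` is a hypothetical helper.

```python
from collections import defaultdict

# Illustrative sample data only: a hand-written subset, NOT an export of
# ATT&CK, Sensor Mappings to ATT&CK, Sigma, or Elastic.
TECHNIQUE_COMPONENTS = {
    "T1059.001": ["Process Creation", "Script Execution"],
}
COMPONENT_LOG_SOURCES = {
    "Process Creation": ["Sysmon EID 1", "Windows Security EID 4688"],
    "Script Execution": ["PowerShell EID 4104"],
}
# Hypothetical set of log sources referenced by public analytic rules.
PUBLIC_ANALYTIC_SOURCES = {"Sysmon EID 1", "PowerShell EID 4104"}


def build_catalog(technique: str) -> dict[str, dict]:
    """Prong 1: technique -> data components.
    Prong 2: data components -> candidate log sources.
    Prong 3: flag sources that public analytic repositories already rely on."""
    catalog: dict[str, dict] = defaultdict(
        lambda: {"components": set(), "in_public_analytics": False}
    )
    for component in TECHNIQUE_COMPONENTS.get(technique, []):
        for source in COMPONENT_LOG_SOURCES.get(component, []):
            entry = catalog[source]
            entry["components"].add(component)  # which components this source covers
            entry["in_public_analytics"] = source in PUBLIC_ANALYTIC_SOURCES
    return dict(catalog)
```

With this sample data, `build_catalog("T1059.001")` returns three candidate sources, and only Windows Security EID 4688 is flagged as absent from the hypothetical public-analytics set.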

This iterative cycle produced much richer log source catalogs than any one of those inputs alone and helped us distinguish which fields matter most when you want to reduce false positives instead of just collecting more data.

During this analysis, we realized that minimum telemetry cannot ignore quality. Some log sources technically see the behavior but do so in brittle, noisy, or delayed ways that will not support effective detections against ambiguous techniques.

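We therefore treated minimum telemetry as the intersection of two conditions: a log source must observe the behavior, and it must do so with enough quality (robust, low-noise, and timely) to support effective detections.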

Our methodology documents which log sources to prioritize for a given technique, as well as their dependencies, useful fields, and the role of each source (e.g., primary detection signal versus supporting role). The result is a repeatable process defenders can use to build or refine their own minimum telemetry sets instead of relying on trial and error.
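One way to record these per-source details is sketched below in Python. It assumes, for illustration, that the two conditions are (1) the source observes the behavior and (2) its quality clears a threshold; the `LogSourceEntry` fields, the 0.0 to 1.0 quality rating, and the 0.6 floor are hypothetical choices, not values from the methodology.

```python
from dataclasses import dataclass, field


@dataclass
class LogSourceEntry:
    """Hypothetical record of what the methodology documents per source."""
    name: str
    observes_behavior: bool          # assumed condition 1: the source sees the behavior
    quality: float                   # assumed condition 2: 0.0-1.0 robustness/noise/latency rating
    role: str = "supporting"         # "primary" detection signal or "supporting"
    dependencies: list[str] = field(default_factory=list)   # e.g., agent or audit policy
    useful_fields: list[str] = field(default_factory=list)  # fields that cut false positives


def minimum_telemetry(
    entries: list[LogSourceEntry], quality_floor: float = 0.6
) -> list[LogSourceEntry]:
    """Keep only sources that satisfy both conditions, primary signals first."""
    kept = [e for e in entries if e.observes_behavior and e.quality >= quality_floor]
    # False sorts before True, so role == "primary" comes first; name breaks ties.
    return sorted(kept, key=lambda e: (e.role != "primary", e.name))
```

Sorting primary sources ahead of supporting ones keeps the main detection signal at the top of the list, while each entry still carries its dependencies and useful fields.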

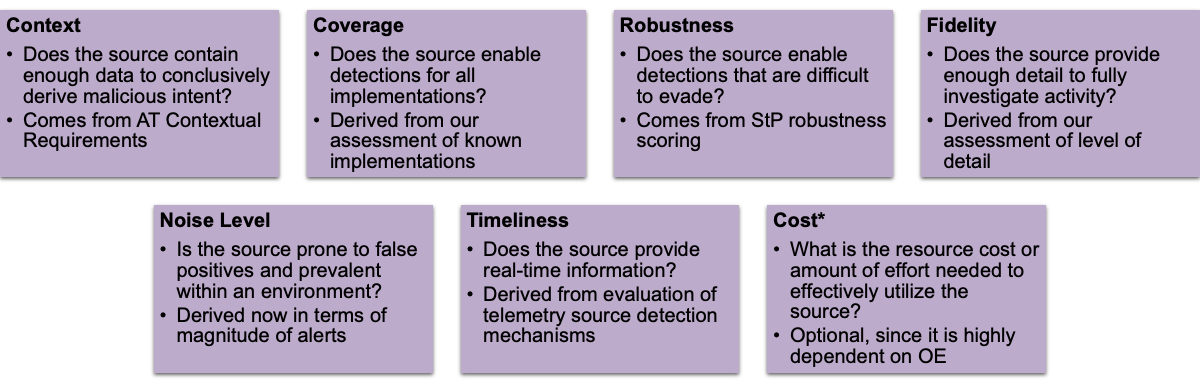

Defining minimum telemetry is necessary, but it still leaves an important question: if you cannot collect everything, then which log sources do you choose? To answer that, we developed a confidence scoring approach that ranks log sources relative to one another for a given technique or use case.

Our confidence scoring model rests on six metrics, plus an optional one. Three metrics describe intrinsic log source quality:

These characteristics hold largely independent of the specific technique and help you understand whether a source can ever support high‑quality detections.

The other three metrics depend on the technique or use case:

Together, these metrics translate familiar qualitative discussions – “this log seems useful” – into structured, comparable scores.

We also list cost as a metric, but we kept it separate from the formal confidence score. Cost is inherently team‑specific, yet it often drives real‑world deployment decisions, so we designed the model to support local tuning without diluting the underlying measures of detection value.
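A minimal Python sketch of how such scores might be combined follows. Because the metrics are not named in this post, the identifiers are placeholders, and the 1 to 5 scale, the unweighted mean, and `cost_weight` are assumptions. What it illustrates is the separation described above: cost can reorder sources for a given team without altering the confidence score itself.

```python
from dataclasses import dataclass

# Placeholder names: the model has three intrinsic-quality metrics and three
# technique/use-case metrics, but these identifiers are hypothetical.
INTRINSIC = ("intrinsic_1", "intrinsic_2", "intrinsic_3")
CONTEXTUAL = ("contextual_1", "contextual_2", "contextual_3")


@dataclass
class ScoredSource:
    name: str
    metrics: dict[str, float]  # each metric rated on an assumed 1-5 scale
    cost: float = 0.0          # optional, team-specific; excluded from confidence

    def confidence(self) -> float:
        """Unweighted mean of the six metrics (an assumed aggregation)."""
        keys = INTRINSIC + CONTEXTUAL
        return sum(self.metrics[k] for k in keys) / len(keys)


def rank(sources: list[ScoredSource], cost_weight: float = 0.0) -> list[tuple[str, float]]:
    """Rank sources by confidence, optionally penalized by local cost.

    With cost_weight=0 the order reflects detection value alone; a team can
    raise it to fold in its own cost without changing any confidence score."""
    ranked = sorted(
        sources, key=lambda s: s.confidence() - cost_weight * s.cost, reverse=True
    )
    return [(s.name, round(s.confidence(), 2)) for s in ranked]
```

With `cost_weight=0`, a source scoring 5 on every metric outranks one scoring 4; raising `cost_weight` lets a cheaper source move ahead while both confidence values stay unchanged.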

We started by scoring log sources for individual ambiguous techniques, but that approach did not reflect how attackers actually operate. Attackers chain techniques toward broader objectives, so security teams must design detections and telemetry strategies for those objectives – like credential abuse or command‑and‑control over legitimate channels – rather than one technique at a time.

To align with that reality, we expanded our process to use cases: groupings of ambiguous techniques that share an adversary objective. We then trained an AI model to scale the scoring process across all identified use cases. By supplying the model with clear objectives, background documents, technique lists, log source candidates, and detailed scoring rubrics, we were able to reproduce and extend our manual analysis while constraining drift and hallucinations. That pipeline gave us a practical way to evaluate many more combinations of techniques and telemetry than would be feasible by hand.
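The roll-up from techniques to a use case can be sketched in a few lines of Python. This is one possible aggregation, not the project's: it assumes per-technique scores already exist (however they were produced) and ranks each log source first by the fraction of the use case's techniques it covers, then by its mean score over those techniques. `rank_for_use_case` and its inputs are hypothetical.

```python
def rank_for_use_case(
    techniques: list[str],
    scores: dict[str, dict[str, float]],
) -> list[tuple[str, float, float]]:
    """Roll per-technique log source scores up to a single use case.

    Returns (source, coverage, mean_score) tuples, where coverage is the
    fraction of the use case's techniques the source was scored for and
    mean_score averages over those techniques only. Ranked by coverage,
    then by mean score."""
    per_source: dict[str, list[float]] = {}
    for technique in techniques:
        for source, score in scores.get(technique, {}).items():
            per_source.setdefault(source, []).append(score)
    rows = [
        (source, len(vals) / len(techniques), sum(vals) / len(vals))
        for source, vals in per_source.items()
    ]
    return sorted(rows, key=lambda r: (r[1], r[2]), reverse=True)
```

Ranking on coverage first favors a source that sees every technique in the objective over one that scores highly on a single technique; a team that prefers depth over breadth could swap the sort key.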

We cover concrete use cases – such as execution via scripting languages, native OS feature abuse, and command‑and‑control over legitimate channels – in our project materials. You can explore those examples, including full scoring tables and key design takeaways, on our detection engineering page.

We built this phase of ambiguous techniques research to help you move from intuition to evidence when you design detection strategies. The minimum telemetry methodology and confidence scoring model provide a data‑driven, repeatable way to prioritize log sources, close visibility gaps, and justify investments to your leadership.

Now we want to see how this work performs in your environment. Put our detection strategies to use.

Start with one or two ambiguous techniques or use cases that matter most to your program, apply the minimum telemetry and confidence scoring guidance, and observe how your false positives, analyst workload, and missed detections change over time. Then tell us what you learned by emailing us at ctid@mitre.org.

Share with us what matched your experience, where the model diverged, and what additional telemetry or metrics you needed, and we will refine this framework together for the broader community of defenders.

© 2026 The MITRE Corporation. Approved for Public Release. ALL RIGHTS RESERVED. Document number 26-0334.
