A Threat-Informed Community is Necessary for Defense to Function

Threat-informed defense changes the game on the adversary. Threat-informed defenders read their adversaries’ playbooks and then orchestrate a …

By Lex Crumpton, Allison Henao and Amy L. Robertson • August 4, 2025

The exploitation of critical zero-day vulnerabilities in Microsoft SharePoint highlights that adversaries don’t always need new tools to succeed. By chaining familiar techniques with newly discovered flaws, they can bypass defenses without deploying novel malware or infrastructure. Sometimes, all it takes is a gap in how defenders prioritize or perceive risk. Vulnerable organizations should review CISA’s alert and Microsoft’s customer guidance to mitigate potential attacks. But even with patches available and visibility in place, adversaries can still exploit overlooked system behavior, leading defenders to ask a hard question:

“How do we detect and stop this kind of attack at scale?”

In many cases, by the time there’s a signature, it’s already too late – and that’s why this post isn’t another write-up about a zero-day. It’s a conversation about a practical approach to detection, one that’s rooted in behavior and not just signatures. When defenders understand how adversaries think, move, exploit trust, and chain behaviors, they can actively build strategies that surface real threats, even without knowing the exploit.

We hope this approach supports fellow defenders in building a proactive, flexible detection strategy that surfaces adversary activity even when the vulnerability is new or unknown.

Note: The list of MITRE ATT&CK® techniques observed in the exploitation of this vulnerability appears at the end of this post.

When CVE-2025-53770 was initially exploited, there were no indicators of compromise (IOCs), YARA rules, or pre-packaged signatures. But the adversary behavior still left a trail, just not one that most defenses were tuned to follow. The challenge wasn’t a lack of tools but a focus on the wrong signals. Zero-days don’t look like known vulnerabilities, but the behaviors they unlock often do.

Exploits may be novel, but adversaries usually follow predictable patterns once they’ve gained entry: abusing system trust, executing commands from unexpected services, dropping files to gain persistence, or making outbound connections. Behavior-based detections are critical because they work even when the initial entry point is unknown. These behaviors form a causal chain, a connected sequence of observable events that tells the story of the compromise.

CVE-2025-53770 allowed unauthenticated adversaries to trigger remote code execution in Microsoft SharePoint by sending a crafted request to the ToolPane.aspx endpoint. While the initial vector was new, the attacker’s post-intrusion behavior wasn’t:

Unlike static IOCs, causal chains help detect the “what” and “why” of an attack, not just “which tool”. That’s why behavior-first detection engineering is no longer optional; it’s a requirement. It allows defenders to move from “Do we recognize this payload?” to “Do we recognize what adversaries do, no matter how they get in?”

Even with skilled teams and advanced security solutions, many organizations miss behaviors that don’t match known signatures. Why? Because they focus on specific tools or payloads rather than behavioral intent.

Detection strategies should be aligned with how real adversaries operate. That means pulling behaviors from threat intelligence, modeling attack chains, and creating logic that shines light on intent rather than artifacts.

Hypothesis testing isn’t just a theoretical exercise; it’s a practical way to simulate how trusted features might be misused and explore what those actions would look like in your environment. Blending knowledge of adversary behavior with a clear understanding of how systems work enables a shift from assumed visibility to proven detection and helps you validate detection coverage in realistic terms.

For example, defenders might ask:

Threat-informed hypothesis testing helps teams move beyond static rules and signature dependence by turning threat intelligence into action. It blends insight into adversary behavior with a deep understanding of system internals, so you can proactively validate whether you’re truly prepared for real-world tradecraft. A core element of threat-informed hypothesis testing is defensive curiosity, the drive to ask, “What if?” before an adversary answers it for you.

Resources like Summiting the Pyramid, Technique Inference Engine, and Attack Flow offer different ways to reason about adversary behavior, from forming hypotheses at the behavioral level to mapping raw data to techniques and visualizing how those techniques unfold over time.

CVE-2025-53770 abused trusted services in an unexpected way, and that’s exactly the kind of scenario hypothesis testing is designed for.

In the following four examples, we walk through hypotheses that model how an attacker might move through the environment after exploiting this vulnerability. Each test is grounded in real techniques observed in recent intrusions and is meant to help you think through your current visibility and detection logic.

T1059.001: Command and Scripting Interpreter: PowerShell

Observed: The adversary used w3wp.exe to execute PowerShell.

Hypothesis: Does w3wp.exe ever launch child processes in our environment?

Test it: Run a harmless PowerShell command via w3wp.exe in a dev or purple team environment.

Check: Is it logged? Alerted on? Does it match baselines?

Detection goal: Flag non-standard interpreter launches by service processes, regardless of payload content.
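As a rough illustration of this detection goal, the following Python sketch checks process-creation events for a service process spawning an interpreter. It assumes events have already been parsed into dictionaries with `ParentImage` and `Image` keys (the field names Sysmon uses for process creation); the watch lists and the function name `is_suspicious_spawn` are hypothetical and would need tuning against your own baseline.

```python
from pathlib import PureWindowsPath

# Hypothetical watch lists; adjust to what is normal in your environment.
SERVICE_PARENTS = {"w3wp.exe"}
INTERPRETERS = {"powershell.exe", "pwsh.exe", "cmd.exe"}


def _basename(path: str) -> str:
    """Return the lowercased file name portion of a Windows path."""
    return PureWindowsPath(path).name.lower()


def is_suspicious_spawn(event: dict) -> bool:
    """Flag an interpreter launched by a watched service process.

    The payload (command line) is deliberately ignored: the parent/child
    relationship alone is the signal.
    """
    parent = _basename(event.get("ParentImage", ""))
    child = _basename(event.get("Image", ""))
    return parent in SERVICE_PARENTS and child in INTERPRETERS
```

Because the check keys on the parent and child process names rather than the command line, it should fire on the harmless test command just as it would on an encoded payload.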

T1505.003: Server Software Component: Web Shell

Observed: The adversary wrote spinstall0.aspx to _layouts/15/, a path that auto-serves content.

Test it: Drop a benign .txt or .aspx file into _layouts/15/ using different accounts.

Monitor: file creation, access logs, and application behavior.

Detection goal: Alert on unexpected file writes to web-exposed directories by unusual users or processes.
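One way to express this detection goal is sketched below in Python. It assumes file-creation events have been parsed into dictionaries with `Image` (the writing process) and `TargetFilename` keys, as in Sysmon file-creation events. The on-disk path marker and the allowlist of expected writers are assumptions for illustration only; confirm where _layouts/15/ maps on your own servers and which processes legitimately write there.

```python
from pathlib import PureWindowsPath

# Assumption: fragment of the on-disk directory served as _layouts/15/.
WEB_DIR_MARKERS = ("\\template\\layouts\\",)

# Hypothetical allowlist of processes expected to write there (e.g. installers).
EXPECTED_WRITERS = {"msiexec.exe", "psconfig.exe"}


def unexpected_web_write(event: dict) -> bool:
    """Flag any file written into a web-exposed directory by an unexpected process."""
    target = event.get("TargetFilename", "").lower()
    writer = PureWindowsPath(event.get("Image", "")).name.lower()
    in_web_dir = any(marker in target for marker in WEB_DIR_MARKERS)
    return in_web_dir and writer not in EXPECTED_WRITERS
```

The sketch does not filter on file extension, so the benign .txt test file and an .aspx web shell are treated the same way; the location and the writer are what matter.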

T1552.001: Unsecured Credentials: Credentials In Files

Observed: The adversary extracted MachineKey values from web.config and machine.config to forge tokens.

Hypothesis: … w3wp.exe outside of startup?

Test it: Access the files manually using PowerShell or a service account.

Monitor: file read events, Windows Security logs, or EDR telemetry.

Detection goal: Detect rare or first-time access to sensitive config files by unexpected accounts or services.

Note: This hypothesis is scoped to file access detection. Some variants may access cached configuration via memory using .NET APIs, which would require separate instrumentation.
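A first-seen tracker is one simple way to approximate "rare or first-time access". The Python sketch below assumes file-read events have been normalized into dictionaries with hypothetical `user`, `process`, and `path` keys; the class name `FirstSeenAccess` is also illustrative. Like the hypothesis itself, it covers file access only, not in-memory reads of cached configuration.

```python
from pathlib import PureWindowsPath

# Config files named in the hypothesis above.
SENSITIVE_FILES = {"web.config", "machine.config"}


class FirstSeenAccess:
    """Remember which (user, process, file) combinations have been seen before."""

    def __init__(self) -> None:
        self._seen = set()

    def observe(self, event: dict) -> bool:
        """Record the event; return True if it is a first-time sensitive-file access."""
        filename = PureWindowsPath(event.get("path", "")).name.lower()
        if filename not in SENSITIVE_FILES:
            return False
        key = (
            event.get("user", "").lower(),
            PureWindowsPath(event.get("process", "")).name.lower(),
            filename,
        )
        if key in self._seen:
            return False
        self._seen.add(key)
        return True
```

In practice you might replay a baseline period through `observe` and discard the results, then alert only on events that return `True` afterwards.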

T1071.001: Application Layer Protocol: Web Protocols

Observed: The adversary sent requests with a spoofed Referer header (Referer: /_layouts/SignOut.aspx) to bypass filters.

Test it: Replay POST requests with alternate Referer values (e.g., /error.aspx, /login.aspx, or nonexistent pages) in a dev/test environment.

Review: WAF, IIS, or proxy logs for abnormal header use targeting known admin endpoints.

Detection goal: Monitor for forged or unusual Referer headers in application POST traffic, especially those imitating legitimate navigation paths to subvert validation logic.
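The following Python sketch shows one possible shape for this check. It assumes IIS W3C log lines have already been parsed into dictionaries keyed by the standard field names `cs-method`, `cs-uri-stem`, and `cs(Referer)`. The watched endpoint and the SignOut.aspx Referer come from the activity described in this post; the set names and the function name are hypothetical, and the lists would need extending for your own applications.

```python
from urllib.parse import urlsplit

# Endpoint targeted in the activity described in this post.
WATCHED_ENDPOINTS = {"/_layouts/15/toolpane.aspx"}

# Referer paths that make little sense as the origin of a POST to the endpoint.
# The first entry is from this post; add others observed in your own testing.
IMPLAUSIBLE_REFERERS = {"/_layouts/signout.aspx"}


def unusual_referer(entry: dict) -> bool:
    """Flag POSTs to watched endpoints with a missing or implausible Referer."""
    if entry.get("cs-method", "").upper() != "POST":
        return False
    if entry.get("cs-uri-stem", "").lower() not in WATCHED_ENDPOINTS:
        return False
    referer = entry.get("cs(Referer)", "-")
    if referer in ("", "-"):  # IIS logs an absent header as "-"
        return True
    return urlsplit(referer).path.lower() in IMPLAUSIBLE_REFERERS
```

Comparing only the path portion of the Referer means the check behaves the same whether the header carries a full URL or a bare path.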

Behavior-driven testing doesn’t need a major program. It just requires a structured way to explore how adversary behavior would unfold in your own environment. The following five-step process offers a practical method to move from assumed coverage to proven detection.

“What does this system or feature allow an attacker to do if misused?”

Start with a category of access (e.g., unauthenticated HTTP access, config file read, interpreter execution) or a system feature (e.g., VIEWSTATE, ToolShell, SMB access). Don’t wait for the CVE; look at the design assumptions.

“If an attacker had this access, what could they do next?”

Use ATT&CK for inspiration. Frame the behavior chain as a realistic misuse of system trust boundaries, even if no known exploit exists. E.g., “If the web service runs deserialized code, could it launch PowerShell?”

“Can I emulate this behavior safely to validate logging and detection?”

Emulate the behavior (not the exploit). Examples:

Use purple team infrastructure or pre-production where possible.

“What logs are generated? Do existing rules catch it? What’s missing?”

Correlate logs across time, identity, system, and activity:

Document blind spots, noise, or places where causality is lost.
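To make the idea of correlating events into a causal chain concrete, here is a minimal Python sketch. It assumes events from different log sources have already been normalized into dictionaries with hypothetical `host`, `time`, and `behavior` keys, and that the behavior labels in `CHAIN` are ones your pipeline assigns; none of these names come from a specific product. It reports hosts where the behaviors occur in order within a time window.

```python
from datetime import datetime, timedelta

# Hypothetical behavior labels, ordered as an attacker would perform them.
CHAIN = ("web_request", "interpreter_spawn", "file_write", "outbound_connection")


def _chain_from(events, start, chain, window):
    """Check whether the chain completes, in order, starting at events[start]."""
    step = 0
    t0 = events[start]["time"]
    for event in events[start:]:
        if event["time"] - t0 > window:
            return False
        if event["behavior"] == chain[step]:
            step += 1
            if step == len(chain):
                return True
    return False


def hosts_with_chain(events, chain=CHAIN, window=timedelta(minutes=10)):
    """Return hosts on which every behavior in the chain occurs in order."""
    if not chain:
        return []
    by_host = {}
    for event in sorted(events, key=lambda e: e["time"]):
        by_host.setdefault(event["host"], []).append(event)
    hits = []
    for host, host_events in by_host.items():
        for i, event in enumerate(host_events):
            if event["behavior"] == chain[0] and _chain_from(host_events, i, chain, window):
                hits.append(host)
                break
    return hits
```

Running the same events with a shorter chain or a wider window is a quick way to see where causality is being lost, for example when one log source is missing a step.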

“What detection goals should we create? What baseline is normal?”

Turn insights into flexible, behavior-based detection logic. Flag anomalies based on:

Building a sustainable detection strategy rooted in adversary behavior means treating emulation as a regular practice, not a one-time or even periodic red team event. Continuous emulation takes the behaviors observed in the wild and safely replicates them in your own environment to see what is observable, what gets missed, and what could be improved (people, processes, and technology). When defenders understand how adversaries think, move, and chain together behaviors to exploit trust and gain control, they can emulate that tradecraft to test their own readiness.

Adversary emulation is exactly what it sounds like: mirroring the types of behaviors adversaries have used in the wild, but in a safe and controlled way. Instead of focusing on recreating specific exploits or relying only on known malware, defenders can walk through how adversaries operate, from misusing legitimate tools to escalating access or quietly moving through systems. These realistic drills help answer the core question: Would we catch this if it happened here?

Rather than asking, “Would we detect CVE-2025-53770?” a more useful question that emulation could help answer is:

“Would we detect any web service executing unauthorized commands?”

Adversary emulation tools like MITRE Caldera, Atomic Red Team, or the free resources in the ATT&CK Evaluations Adversary Emulation Library can help teams safely replicate core behaviors (e.g., PowerShell spawning from w3wp.exe) without knowing the exact exploit. With this approach, early signs could have surfaced and triggered alerts on:

CVE-2025-53770 is a SharePoint vulnerability, but more than that, it’s a lesson in how assumptions about system behavior can be exploited, even in mature environments. Strong tooling is important, but operational readiness depends on the questions we’re willing to ask ahead of time:

You can’t predict the next zero-day, but you can build a process that helps you detect the behaviors behind it. That starts by turning threat intelligence into hypotheses and making continuous emulation a regular part of your detection engineering process.

The next attack may not look exactly like this one, but the behavior patterns will feel familiar, especially if you’ve practiced for it.

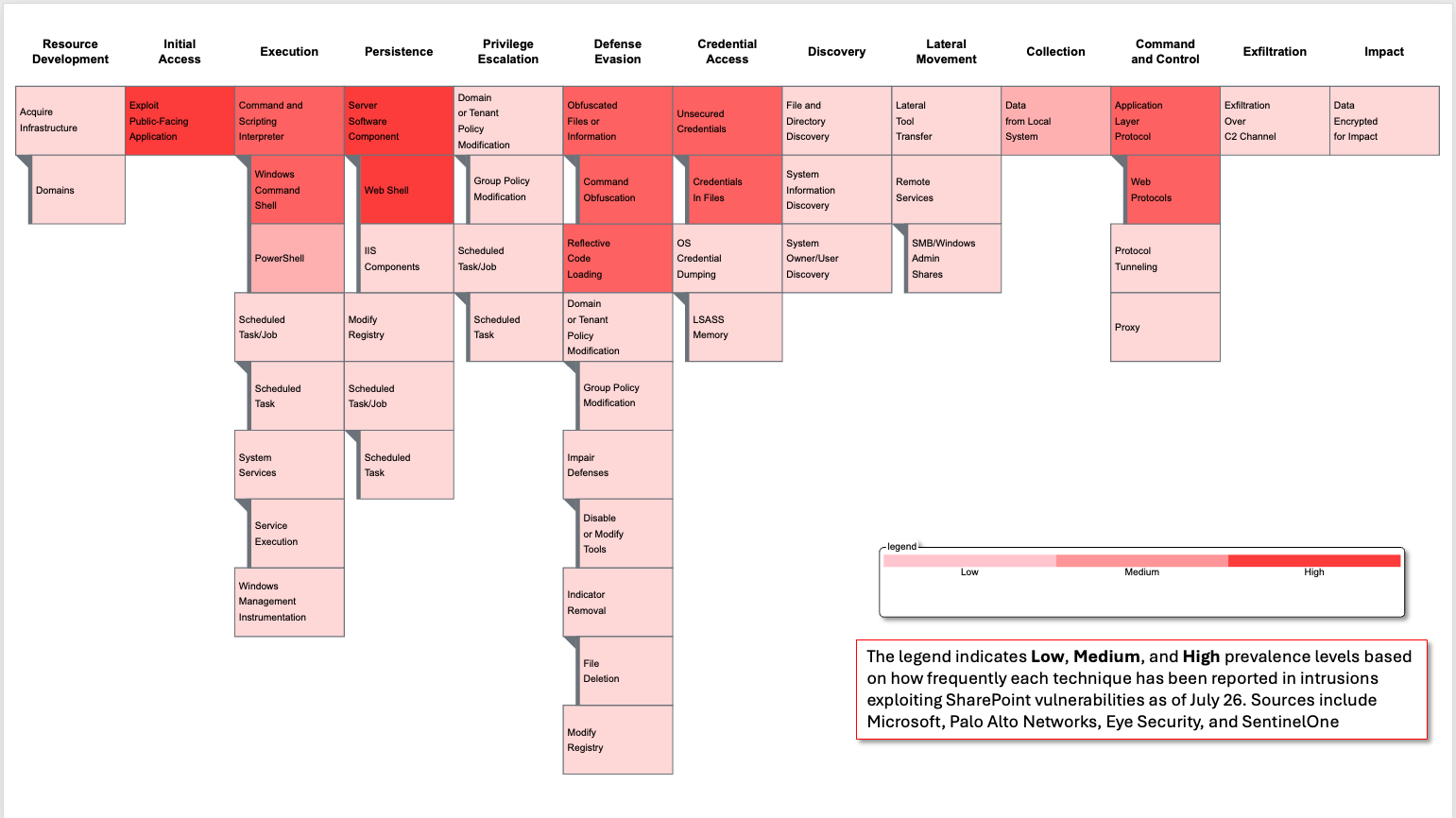

The ATT&CK Tactics, Techniques, and Procedures (TTPs) outlined below reflect the initial observed activity associated with the exploitation of the SharePoint vulnerabilities. These mapped behaviors provide an early view of adversarial operational flows but should not be considered comprehensive. This view will continue to evolve as more technical reporting and analysis become available.

| ID | Technique Name | Procedure |
|---|---|---|
| Resource Development | | |
| T1583.001 | Acquire Infrastructure: Domains | The adversary (STORM-2603) established command and control infrastructure that typosquatted or spoofed Microsoft, using the C2 domain update[.]updatemicfosoft[.]com. |
| Initial Access | | |
| T1190 | Exploit Public-Facing Application | The adversary sends a crafted POST request to /_layouts/15/ToolPane.aspx, exploiting a deserialization flaw (CVE-2025-53770) and bypassing authentication through CVE-2025-53771, allowing unauthenticated RCE. Other adversaries sent variations of .aspx files. |
| Execution | | |
| T1059.001 | Command and Scripting Interpreter: PowerShell | The w3wp.exe process invokes PowerShell via the deserialized payload (System.Diagnostics.Process), executing attacker-controlled encoded commands. |
| T1059.003 | Command and Scripting Interpreter: Windows Command Shell | The attackers utilized cmd.exe and batch scripts within the victim environment. |
| T1569.002 | System Services: Service Execution | The adversaries used services.exe to disable Defender via registry keys. The adversaries also leveraged PsExec for execution of commands. |
| T1047 | Windows Management Instrumentation | The adversary used WMI to execute commands. |
| Persistence | | |
| T1505.003 | Server Software Component: Web Shell | A malicious .aspx web shell (spinstall0.aspx) is written to the _layouts/15/ directory, granting persistent HTTP-based access to the SharePoint server. |
| T1053.005 | Scheduled Task/Job: Scheduled Task | The adversary (STORM-2603) created scheduled tasks to establish persistence. |
| T1505.004 | Server Software Component: IIS Components | The adversary (STORM-2603) modified Internet Information Services (IIS) components to load suspicious .NET assemblies to maintain persistence. |
| Collection | | |
| T1005 | Data from Local System | The attackers extract information from the compromised system. |
| Defense Evasion | | |
| T1027.010 | Obfuscated Files or Information: Command Obfuscation | The adversaries encoded PowerShell commands in Base64. |
| T1620 | Reflective Code Loading | The attackers reflectively loaded payloads using “System.Reflection.Assembly.Load”. |
| T1070.004 | Indicator Removal: File Deletion | Temporary files or logs may be deleted by the attacker to cover traces after deployment of the web shell or PowerShell scripts. |
| T1484.001 | Domain or Tenant Policy Modification: Group Policy Modification | The adversary (STORM-2603) modified group policy to distribute ransomware within compromised environments. |
| T1562.001 | Impair Defenses: Disable or Modify Tools | The attacker (STORM-2603) disabled security services, including Microsoft Defender, via the registry. |
| T1112 | Modify Registry | The attacker (STORM-2603) disabled security services by modifying registry keys. |
| T1036.005 | Masquerading: Match Legitimate Resource Name or Location | The spinstall0.aspx file mimicked installer naming conventions, and debug_dev.js resembled legitimate dev assets to avoid suspicion. |
| T1140 | Deobfuscate/Decode Files or Information | The attacker decoded the contents of the files created. |
| Credential Access | | |
| T1552.001 | Unsecured Credentials: Credentials in Files | The attacker accesses web.config and machine.config to extract MachineKey values, enabling them to forge legitimate VIEWSTATE tokens for future deserialization payloads. |
| T1003.001 | OS Credential Dumping: LSASS Memory | The attackers used Mimikatz to retrieve credentials from LSASS memory. |
| Command and Control | | |
| T1071.001 | Application Layer Protocol: Web Protocols | The attacker issues HTTP POST requests to the web shell with spoofed or empty Referer headers, circumventing authorization controls. |
| T1090 | Proxy | The attacker utilized a Fast Reverse Proxy to connect to C2. |
| T1572 | Protocol Tunneling | The attacker utilized an NGROK tunnel to deliver PowerShell to C2. |
| Discovery | | |
| T1082 | System Information Discovery | The attacker uses command execution to fingerprint the SharePoint system (e.g., OS version, running processes). |
| T1083 | File and Directory Discovery | Commands are run to locate accessible file shares, backup paths, or SharePoint content. |
| T1033 | System Owner/User Discovery | The attacker executed the “whoami” command on the victim machine to enumerate user context and validate privilege levels. |
| Exfiltration | | |
| T1041 | Exfiltration Over C2 Channel | Stolen credentials or internal data are encoded and exfiltrated over HTTPS to the attacker's infrastructure. |
| Lateral Movement (Post-Intrusion) | | |
| T1021.002 | Remote Services: SMB/Windows Admin Shares | The adversary uses stolen credentials or tokens to pivot to additional internal systems. |
| T1570 | Lateral Tool Transfer | The adversary (STORM-2603) used Impacket to leverage WMI and execute payloads. |
| Impact | | |
| T1486 | Data Encrypted for Impact | The attacker (STORM-2603) deployed Warlock ransomware on victim environments via GPO. |

© 2025 The MITRE Corporation. Approved for Public Release. ALL RIGHTS RESERVED. Document number PR_25-01296-2.
